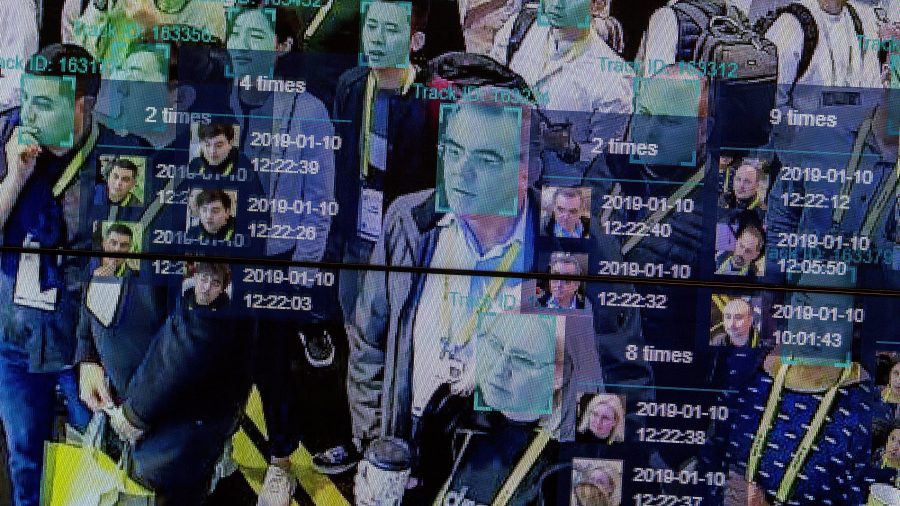

How facial-recognition technology can lead to wrongful arrests

Facial-recognition software is leading to wrongful arrests, but the secrecy around the use of the technology makes it hard to know just how often it happens.

So far, there are at least five known cases in the United States in which police use of facial-recognition algorithms has led to mistaken-identity arrests. All five involved Black men.

Nate Freed Wessler is part of the team representing one of those men in a case against the Detroit Police Department. He’s also a deputy director of the American Civil Liberties Union’s Speech, Privacy, and Technology project.

Marketplace’s Meghan McCarty Carino spoke with Wessler about facial-recognition technology and why it leads to these outcomes.

Below is an edited transcript of their conversation.

Nate Freed Wessler: This technology is a black-box algorithmic artificial intelligence technology. It's far from perfect even in ideal test conditions, and the conditions in which police use it are far from ideal. Police are feeding in all kinds of photos of varying quality: images from surveillance or security cameras, where the scene might be shadowy or someone's face might be partly obscured by a hat or sunglasses. All of those photo-quality issues lower the reliability of the algorithm's result. In other words, there's a higher chance of a false match.

We now know of five cases around the country where Black men were falsely arrested for crimes they had nothing to do with because police relied on incorrect matches from this technology. I'm part of the legal team that represents one of those men. His name is Robert Williams, and he lives in the Detroit area in Michigan. He was arrested in his front yard in front of his wife and his two very young daughters. Detroit police held him in jail for 30 hours and interrogated him about a shoplifting crime that he had nothing to do with. In fact, at the time of the incident he was driving home from work, miles away outside the city. Yet police took the result of a face-recognition search that incorrectly identified him and relied on it to get an arrest warrant.

Meghan McCarty Carino: Given that the use of this technology usually isn't disclosed when someone is arrested, I can imagine there might be more mistakes that haven't come to light.

Wessler: That's exactly right. We strongly suspect there are many more. The only way you can find out that this technology was used against you is if the police tell you, and if police are hiding this information from judges and defense attorneys, then we really have no way to know how pervasive these false arrests are. This technology is dangerous when it makes mistakes, as with the false arrest problem, but it's also dangerous when it correctly identifies people. The real nightmare scenario is police hooking this technology up to networks of surveillance cameras so that they can instantaneously identify anyone who walks across a camera's frame and track our movements as we go about our daily lives.

McCarty Carino: What are some of the ways that bias seeps into this technology?

Wessler: These algorithms have been tested by the National Institute of Standards and Technology and by numerous independent researchers, who have found that facial-recognition algorithms have significantly higher rates of false identifications when used to try to identify people of color, particularly Black people.

That's partly because the algorithms are trained by feeding them huge sets of pairs of different photos of the same face, and those training data sets have historically been composed disproportionately of white people's faces, which means the algorithms are better at identifying white people than people of color. There are also problems with the default color-contrast settings in digital cameras, which are optimized for lighter-skinned people. As a result, details of darker-skinned people's faces are often less discernible, so the systems have less data to process, and that produces a higher rate of false identifications of darker-skinned people.

Additionally, there’s lots of research about humans having a lesser ability to identify people from other racial groups. So, if it’s a white police employee trying to figure out the computer’s purported match to a Black suspect, they’re going to have a higher chance of getting it wrong themselves. They’re not going to correct the error; they’re going to make it worse.

On top of that, we have all the biases that are already baked into this country's criminal-enforcement system. When local police departments buy access to a face-recognition algorithm, they generally use it to match photos of people they want to identify against their own photo database. What photo database does a local police department have? Usually mugshots from arrests. We know that police departments around the country disproportionately overpolice communities of color, so those mugshot databases are going to be disproportionately composed of Black and brown people. That means the matching database isn't representative of the general population, which raises the prospect of misidentifying people generally and misidentifying people of color in particular. This technology, far from making things better, is just making things worse. Police should walk away from it, and our lawmakers should make them walk away from it.

McCarty Carino: Is there anything that could be done, whether it be improvements to the technology or greater transparency around it, that would make you comfortable with its use by police?

Wessler: Fundamentally, this technology just doesn't have a place in American policing. Over time, researchers have found that the accuracy of some of the algorithms has inched up, but even that won't fix the problem of false arrests and wrongful investigations, because the systems will always make mistakes in real-world conditions.

Once a system has misidentified someone, we're relying on police to correct the error, but extensive research by social scientists and psychologists shows a human cognitive bias toward believing the results of computer systems. People have an inherent, deeply ingrained tendency to believe algorithms and the results they spit out. So it is very hard to design safeguards that will stop police from assuming that a facial-recognition match must be right and then structuring their investigation around it. That's always going to be a problem, and this technology is not making it better. It's making it worse.

Related links: More insight from Meghan McCarty Carino

The Michigan case that Wessler is working on, in which he represents Robert Williams, is still underway. In our conversation, Wessler told me that, in addition to seeking justice for his client, he hopes the case will set a new legal precedent on the use of facial-recognition technology by law-enforcement agencies.

In the meantime, this type of misidentification continues to happen.

Last month, The New York Times reported on the case of Randal Reid, a Georgia man who was arrested in November and accused of using stolen credit cards to buy designer purses in Louisiana — a state he’d never even been to. Reid spent nearly a week in jail and thousands of dollars on legal fees.

The Times reported that facial-recognition technology was used to identify — or misidentify — Reid. But none of the official court documents used to arrest him disclosed that information.

A 2022 study by the Pew Research Center found that the share of Americans who viewed police use of facial-recognition technology as a good idea outnumbered both those who saw it as a bad idea and those who said they weren't sure.

But Black Americans were less likely than other groups to view the technology in a positive light.
