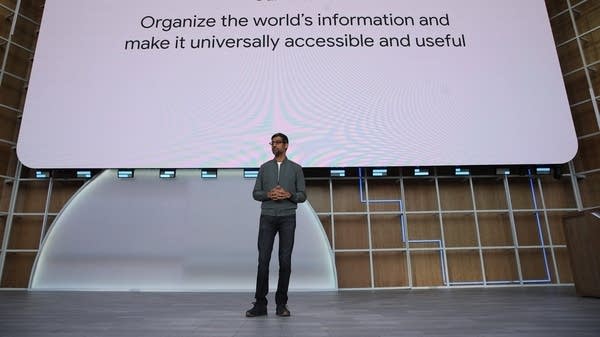

Google executive James Manyika emphasizes the benefits of artificial intelligence projects and points to the “collective effort” needed to mitigate the risks.

Google is bringing artificial intelligence to … like, everything.

Last week, the company announced updates to its Bard chatbot and integrations into search, productivity tools, health care services and more.

But plenty of people are calling for more caution with this technology, from the thousands of tech and science experts who signed an open letter calling for a pause in AI development to renowned former Google employee Geoffrey Hinton, a computer scientist whom many consider the “godfather” of AI.

Hinton recently left the company. Though he said Google “has acted very responsibly” when it comes to AI, he sought the freedom to “talk about the dangers of AI without considering how this impacts Google.”

Marketplace’s Meghan McCarty Carino asked James Manyika, Google’s senior vice president of technology and society, about how the company is balancing concerns about the risks AI poses with its plans to advance the technology and develop applications for it.

The following is an edited transcript of their conversation.

James Manyika: Think about all the benefits to people today, never mind in the future. So for example, we now provide flood alerts to 20 countries covering 350 million people. That’s a lot of people benefiting from the capabilities. Think about the groundbreaking progress we’ve made in the life sciences with AlphaFold that’s going to benefit drug discovery [and] therapy. So I think the benefit side is so incredibly compelling. At the same time, we do have to take responsibility pretty seriously. And so we’re investing an enormous amount in trying to be responsible and trying to move when we think these capabilities are ready. I understand the sentiment and concerns that were indicated by people asking for the pause. I share many of those concerns. But it’s not clear to me that that’s the way to address them. But, you know, the concerns are quite important. And we have to take them seriously.

Meghan McCarty Carino: I guess it’s not just the benefits of this technology that might be driving the pace. There’s also commercial competition. You know, Google has some fierce competition from Microsoft and OpenAI that is pushing ahead. I mean, is a race like this conducive to responsibility?

Manyika: Well, I don’t know about race. I mean, I think we’re proceeding at our pace. It’s always interesting to me that half the world thinks Google is moving too slowly and is being too conservative and not putting things out as quickly. And the other half thinks we’re moving too fast. I think there’s a healthy tension there because it reflects this idea [that] we’re trying to be bold and responsible. I think we’re kind of going at a pace that feels about right to us and we’ll course correct as we go because we’re learning a lot. We’re learning from users. We’re learning from feedback. But we’re still compelled by this idea that, you know, we want to do both things — be bold and be responsible. And we like the tension. We think it’s a very productive tension for us.

McCarty Carino: Google in the past has been lauded for establishing a very robust AI ethics team. But over the recent years, that team has been kind of dogged by conflict and controversy. There has been a succession of high-profile departures on bad terms, you know, widespread reporting of internal concerns being raised about products being released quickly. How can the public trust that these products are safe, given this kind of disagreement even within the company?

Manyika: One of the things I love about Google and the Google culture is the incredible amount of debate we have amongst ourselves. And I think the pace at which we’re developing these technologies speaks for itself. We’re learning a lot, but boy do we have a lot of debate and discussion about it. In fact, inside Google, by the way, half the people think we’re moving too fast and half think we’re moving too slowly. I think that’s a healthy tension and a healthy environment. We keep learning things, and we’re going to keep learning things. You know, we were the first company to actually put out a set of AI ethics principles in 2018. And we have published a progress report every year since then, where we transparently describe how we’re doing, where we’re making progress and where we still have work to do. Now, do we always get it right, every single time? No. Do we learn from it? Absolutely. So that’s, I think, part of what we’re trying to do.

McCarty Carino: Some of the harms that we have talked about, things like bias, misinformation, these things can, to a certain extent, I think be tested for and tweaked in development. But when we think about some of the bigger societal harms that are being contemplated with artificial intelligence, things like massive job displacement or breakdown of social trust, these seem like problems that are hard for a company like Google to, you know, solve or address on its own. So what needs to happen there, you know, if we take as an assumption that companies like Google do have a moral and ethical responsibility to mitigate those kinds of harms?

Manyika: Well, I think these are incredibly important questions that we’re all going to need to grapple with. And it’s part of the reason, Meghan, why this has to be a collective effort. I mean, you mentioned the question of jobs, which happens to be a topic that I’ve spent an enormous amount of time researching with colleagues both at Google and outside in academia. The way you might summarize the jobs question is there are going to be jobs lost, there will be jobs gained and a whole lot more jobs that will be changed. I think we’ll see all three things happening at the same time. But if you look at much of that research, for the foreseeable future I don’t think there’s going to be a shortage of jobs. But there’ll be some really important things that we collectively will need to grapple with. We know we’re going to need to grapple with, you know, skills and reskilling and adaptation as people work alongside these incredibly powerful technologies. And we’re also going to need to think about how we make sure we’re educating people well and learning well in order to take advantage of the possibilities of these technologies. These are real issues that we’re going to need to work on and grapple with. And I think that’s not just a job for Google, although we’re trying to play our part, and we’re doing a lot of skills training and digital certificates that have benefited many people around the country in multiple states. But these are collective efforts involving us, other companies, other employers, but also the governments.

McCarty Carino: And do you think that this technology could have a role to play in reducing inequality, particularly global inequality, which you have spent much of your career working on?

Manyika: Absolutely. I mean, think about people who’ve never had access to the world’s information. Think about people who haven’t had the opportunity, who have great ideas, and haven’t had the opportunity to go to coding school, be able to code, but now they can speak to Bard, in [their] natural language and Bard can turn that into code. You know, there’s something like 7,000 languages spoken in the world, if you wanted to cover over 90% of the human population. Right now, we can only translate [over] 100. With these AI technologies, we now have an initiative at Google to get to 1,000 languages. Think about the amount of information access when people can access information in their own language through speech, text, and that’s incredibly uplifting for a huge portion of society. So I think those possibilities are enormous for people everywhere.

You can read or listen to Part 1 of our interview with James Manyika, focusing on some of the specifics of the AI reveals from the Google conference last week and how the company is creating tools to combat AI misuse by bad actors.

AI misuse by bad actors is a prospect that Apple co-founder Steve Wozniak expressed concerns about in a recent interview with the BBC.

He, along with Elon Musk and thousands of others, signed that open letter I mentioned earlier that called for a pause. In the interview, Wozniak warned that the breakneck pace of AI development would likely worsen issues with deepfakes and misinformation.